We test at branch level by design. Changes are scoped, environments are isolated, and validation is targeted. We don’t run full regression unless the scope demands it, because unnecessary regression slows delivery without increasing confidence. That approach works when the environment behaves predictably and state is clean.

The problems begin when the same change reaches staging. A defect is logged with clear steps and expected behavior. Another tester verifies it and sees something slightly different. Nothing has been redeployed. The branch didn’t change. Yet the outcome shifts between testers. That shift is not random. It’s usually the moment caching becomes visible.

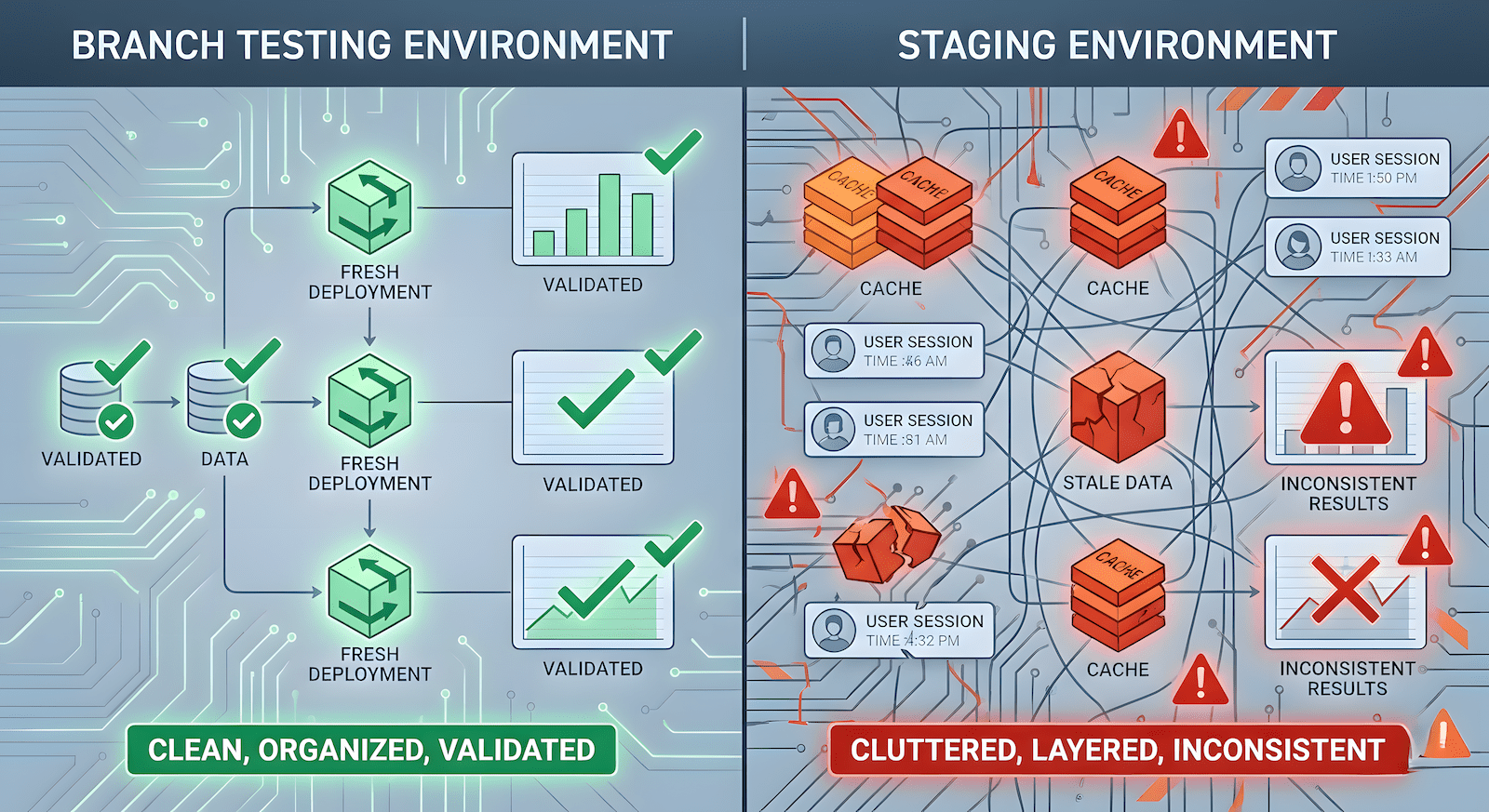

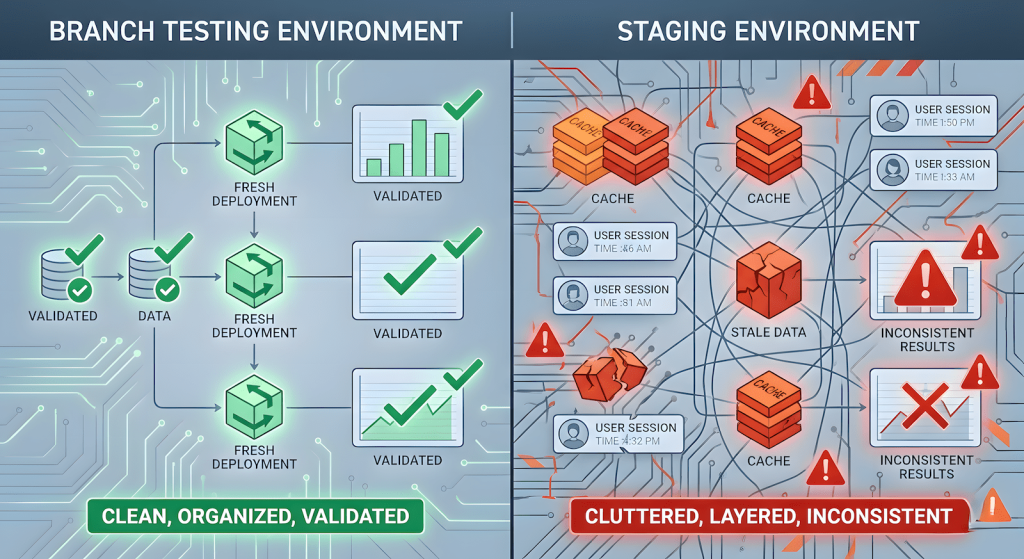

Why Branch Feels Stable and Staging Doesn’t

Branch-level testing keeps environments clean because they’re short-lived and narrowly used. They’re spun up for a specific scope, validated, and discarded. There’s minimal historical state, fewer long-lived sessions, and little accumulated residue from previous deployments. What you deploy is what you validate.

Staging is different. It persists. Multiple testers log in with different roles. Sessions remain active for longer periods. Feature flags are toggled. Hotfixes are applied. Caching is active and often configured to mirror production behavior. Over time, staging accumulates invisible state. That state isn’t obvious from the UI, but it affects behavior. Two testers can hit the same URL and interact with slightly different client conditions. That’s where reproducibility begins to drift.

The Drift Doesn’t Look Like a Bug

Caching rarely produces obvious failures. It produces inconsistency. A feature flag changes server-side, but one browser continues to render the previous configuration. An API response is updated, yet another client still shows stale data. A fix is deployed successfully, but the UI still reflects old behavior.

No single observation looks catastrophic. However, the pattern becomes clear when verification results vary without code changes. Once the same steps no longer produce the same outcome, determinism is compromised. Determinism is the foundation of validation. Without it, every defect discussion becomes conditional.
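The pattern described above can be made explicit by grouping verification results by build and reproduction steps, then flagging any group where the outcomes disagree. The following is a minimal sketch, not a description of any particular tracker: the `Observation` fields and the `find_nondeterministic` helper are hypothetical names introduced for illustration.

```python
from collections import defaultdict
from typing import NamedTuple


class Observation(NamedTuple):
    tester: str    # who ran the steps
    build: str     # release identifier that was active during the run
    steps_id: str  # identifier of the reproduction steps (e.g. a defect key)
    outcome: str   # what the tester actually observed


def find_nondeterministic(observations):
    """Return sorted (build, steps_id) pairs where outcomes differ.

    Same build plus same steps should give the same outcome. More than
    one distinct outcome in a group suggests hidden state, such as a
    client cache, rather than a code change.
    """
    outcomes = defaultdict(set)
    for obs in observations:
        outcomes[(obs.build, obs.steps_id)].add(obs.outcome)
    return sorted(key for key, seen in outcomes.items() if len(seen) > 1)
```

A group that appears in the result is a candidate for the structural checks rather than for another round of rewritten reproduction steps.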

Why Clearing Cache Isn’t a Solution

The reflex response is often to clear cache. That action may align behavior temporarily, but it changes the test conditions. If a hard refresh alters the outcome, the system wasn’t stable beforehand. The goal of QA is not to confirm that the application works after manual correction. The goal is to validate predictable behavior under normal interaction.

Users don’t consistently reset their environment before using the system. If inconsistent client state leads to inconsistent behavior, that inconsistency belongs in the validation conversation. When clearing cache becomes a prerequisite for alignment between testers, validation shifts from testing logic to managing environment state.

When Defect Reports Start Evolving

The impact becomes visible in defect handling. A defect is logged with clear reproduction steps. Another tester verifies it and observes a slightly different result. The steps are adjusted. Expected behavior is rewritten. The description evolves even though the code didn’t change.

When reproduction depends on browser history or client cache state, defect reports lose stability. Instead of isolating application logic, time is spent synchronizing environments. That overhead is subtle but cumulative. Over time, verification discussions shift from identifying root cause to reconciling inconsistent observations. Confidence erodes quietly because evidence is no longer uniform.

Fix Verification Under Caching

Caching also interferes with fix validation. A hotfix is deployed. QA retests and still observes the issue. Logs confirm that the new code is active. Someone refreshes the page, and the behavior changes. The fix was correct, but the client’s cached state wasn’t invalidated automatically.

Time is spent rechecking what has already been corrected. This introduces friction in delivery cycles and extends verification time unnecessarily. The issue isn’t whether caching exists. The issue is whether invalidation is predictable and visible.
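One way to make invalidation visible during a retest is to compare the build the server reports with the build the client is actually running before interpreting the result. The sketch below assumes both identifiers are available as plain strings. The function name, its parameters, and the result labels are hypothetical and only illustrate the decision order.

```python
def classify_retest(expected_build: str,
                    server_build: str,
                    client_build: str,
                    issue_seen: bool) -> str:
    """Classify a retest result using build identifiers.

    expected_build: the build that is supposed to contain the fix
    server_build:   the build the server reports at runtime
    client_build:   the build embedded in the assets the browser loaded
    issue_seen:     whether the tester still observed the defect
    """
    if server_build != expected_build:
        # The environment is not running the fix yet.
        return "fix not deployed"
    if client_build != server_build:
        # The browser is running older assets, so the observation
        # says nothing reliable about the fix either way.
        return "stale client"
    return "fix ineffective" if issue_seen else "verified"
```

For example, `classify_retest("2.1.1", "2.1.1", "2.1.0", True)` returns `"stale client"`, which points the discussion at invalidation instead of reopening the defect.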

What We Check Instead of Arguing

When inconsistent behavior appears and caching is suspected, debate doesn’t help. Structural checks do. We verify the following:

- Active build visibility – The build version or release identifier is visible at runtime so we can confirm which code is actually being executed.

- Cache header inspection – Dynamic or identity-based endpoints are inspected to ensure responses aren’t being cached generically when they depend on role or configuration.

- Forced refresh comparison – Behavior is compared with and without a forced refresh to determine whether client state alters outcomes.

- Session isolation – We log in as different users consecutively and confirm that permissions and UI state reset properly.

- Deploy-triggered invalidation – Deployments trigger predictable invalidation of static assets and configuration so fixes propagate without manual intervention.

- Cross-tester reproduction – Reproduction can occur across testers without requiring cache clearing or special browser settings.

These checks focus on determinism rather than effort. They measure whether the environment itself is stable.
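The cache header inspection in the list above can be partly automated. The sketch below parses a `Cache-Control` header and flags responses that a browser could reuse without contacting the server. It assumes a deliberately strict policy for identity- or configuration-dependent endpoints: only `no-store` or `no-cache` is accepted, and a missing header is flagged. That policy and the function names are assumptions made for illustration, so adjust them to whatever the team has actually agreed on.

```python
def parse_cache_control(value: str) -> dict:
    """Parse a Cache-Control header value into {directive: argument}.

    Directive names are lowercased; directives without an argument
    map to an empty string.
    """
    directives = {}
    for part in value.split(","):
        part = part.strip().lower()
        if not part:
            continue
        name, _, arg = part.partition("=")
        directives[name.strip()] = arg.strip().strip('"')
    return directives


def flags_generic_caching(headers: dict) -> bool:
    """Return True if the response may be reused without revalidation.

    Assumed policy: an identity-dependent endpoint must send either
    no-store (never stored) or no-cache (stored, but revalidated with
    the server before every reuse). Anything else is flagged.
    """
    # HTTP header names are case-insensitive, so normalize the keys.
    normalized = {key.lower(): value for key, value in headers.items()}
    directives = parse_cache_control(normalized.get("cache-control", ""))
    return not ("no-store" in directives or "no-cache" in directives)
```

Running `flags_generic_caching` over the response headers of role- or flag-dependent endpoints gives a quick list of candidates to review by hand. It does not replace checking how the CDN or service worker, if any, treats those responses.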

The Real Cost

Caching improves performance at the client level. However, unmanaged caching introduces invisible state that interferes with reproducibility. That interference doesn’t usually cause catastrophic failures. It creates small inconsistencies.

Small inconsistencies slow triage. They stretch verification time. They create hesitation during release discussions. Over time, that hesitation becomes normal because outcomes are no longer strictly predictable. QA exists to protect certainty in delivery. If the environment allows hidden client state to influence behavior unpredictably, certainty weakens. Performance optimization shouldn’t compromise validation integrity.

Caching isn’t inherently wrong. Unmanaged caching is. If determinism isn’t preserved across environments, validation shifts from confident verification to conditional acceptance. And conditional acceptance isn’t what QA is meant to provide.